The documentation here shows that PromptQL supports Gemini and that it can be set in the config. The Natural Language API also has a setting for selecting model providers, but it returns an error when you try to use Gemini. When will Gemini be supported in the API?

Here is the error I got when I tried to use Gemini:

PromptQL API error: Error: PromptQL API error: 422 - {"detail":[{"type":"union_tag_invalid","loc":["body","llm"],"msg":"Input tag 'gemini' found using 'provider' does not match any of the expected tags: 'hasura', 'openai', 'anthropic'","input":{"provider":"gemini","model":"gemini-2.0-flash","api_key":"<redacted>"},"ctx":{"discriminator":"'provider'","tag":"gemini","expected_tags":"'hasura', 'openai', 'anthropic'"}},{"type":"union_tag_invalid","loc":["body","ai_primitives_llm"],"msg":"Input tag 'gemini' found using 'provider' does not match any of the expected tags: 'hasura', 'openai', 'anthropic'","input":{"provider":"gemini","model":"gemini-2.0-flash","api_key":"<redacted>"},"ctx":{"discriminator":"'provider'","tag":"gemini","expected_tags":"'hasura', 'openai', 'anthropic'"}}]}

at execute (lib/apis/promptQL/client.ts:448:18)

Hiya, @ma2ran — thanks for the question!

Yes, PromptQL supports Gemini models as you found in the docs here.

Can you share a bit more information to help me unblock you?

- It would be helpful if you could share your promptql_config.yaml file.

- When was the project created?

- When you say, "The language API also has a setting for selecting model providers," can you be more specific? There should only be one place where you need to set your configuration, as explained by the docs you linked.

From digging through a bit of history internally, @ma2ran, I strongly suspect this project is quite old (by our standards) and predates support for Gemini.

You should be able to delete the Docker image for promptql_playground and rebuild it when running ddn run docker-start.

This will pull the latest image (which supports Gemini) and should work with your config.

Here’s some extra info

- Here is my promptql_config:

    kind: PromptQlConfig
    version: v1
    definition:
      llm:
        provider: gemini
        model: gemini-2.0-flash
        api_key: *************************************
      ai_primitives_llm:
        provider: gemini
        model: gemini-2.0-flash
        api_key: *************************************

- The project was initialized 3 months ago. I’ve been updating it since with new connections. The latest update was yesterday.

- I was trying to set it using the PromptQL Natural Language API, documented here: Natural Language API | Hasura PromptQL Docs.

Oh snap, yeah, I can try deleting my old promptql_playground image.

Another question I had was, how do I pass environment variables for my Gemini API key into the promptql_config.yaml file? I just put the raw string in for testing.

Update

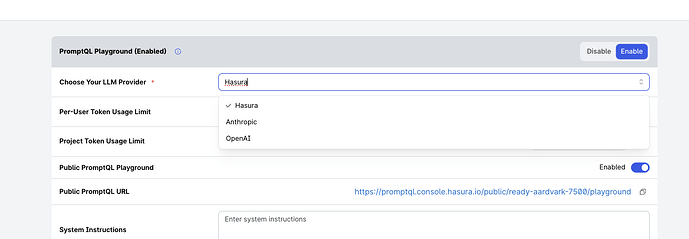

I’ve deleted my old project and rebuilt the container, but I still see no difference. The LLM provider is still set to Hasura and there’s no Gemini option.

Unfortunately, I see where the confusion is:

- The screenshot you shared is for your hosted Hasura Cloud project.

- The configuration in your promptql_config.yaml is for your local instance.

- It’s not very clear, but these are two separate configurations.

- I’m not sure why the dropdown doesn’t provide other options, but I’ve reached out to the team and will get back to you in this thread.

For the problem at hand, you can verify that your local instance is using the Gemini configuration from your file: run an API query against the build from the new image and check whether the same error is returned.
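To make "the same error" easy to spot, here is a minimal TypeScript sketch that inspects a response for the `union_tag_invalid` validation error quoted earlier in this thread. It makes no network call: `rejectedProviderFields` is a hypothetical helper, not part of any PromptQL SDK, and the `ValidationDetail` shape is assumed from that one error message only.

```typescript
// Shape of one entry in the 422 "detail" array, inferred from the error in this thread.
interface ValidationDetail {
  type: string;
  loc: string[];
  msg: string;
  ctx?: { discriminator?: string; tag?: string; expected_tags?: string };
}

// Hypothetical helper: lists the request fields that were rejected because
// the server does not recognise the given provider tag.
function rejectedProviderFields(status: number, body: string, provider: string): string[] {
  if (status !== 422) return [];
  let parsed: { detail?: ValidationDetail[] } = {};
  try {
    parsed = JSON.parse(body);
  } catch {
    return []; // not JSON, so not the validation error we are looking for
  }
  const details = Array.isArray(parsed.detail) ? parsed.detail : [];
  return details
    .filter((d) => d.type === "union_tag_invalid" && d.ctx?.tag === provider)
    .map((d) => d.loc.join("."));
}

// Trimmed copy of the error body from this thread.
const sampleBody = JSON.stringify({
  detail: [
    { type: "union_tag_invalid", loc: ["body", "llm"], msg: "...", ctx: { tag: "gemini" } },
    { type: "union_tag_invalid", loc: ["body", "ai_primitives_llm"], msg: "...", ctx: { tag: "gemini" } },
  ],
});

const fields = rejectedProviderFields(422, sampleBody, "gemini");
console.log(fields.join(", ")); // prints "body.llm, body.ai_primitives_llm"
```

An empty result after the rebuild would suggest the new image accepts the `gemini` provider tag; a non-empty one means the server is still validating against the old provider list.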